Machine Learning for Large Scale Code Analysis

for the KTH TCS Seminar series

on March 26, 2019

by Hugo Mougard

Talk Plan

- Introduction

- Overview of source{d} stack

- Latest ML team project

Introduction

source{d}

- ~40 employees, full-remote

- ML team: 7 employees

- Open-core company

- Goal: make ML on Code easy

ML on Code

Harder than Computer Vision or NLP

- Less tooling

- Fewer models

- Less visibility

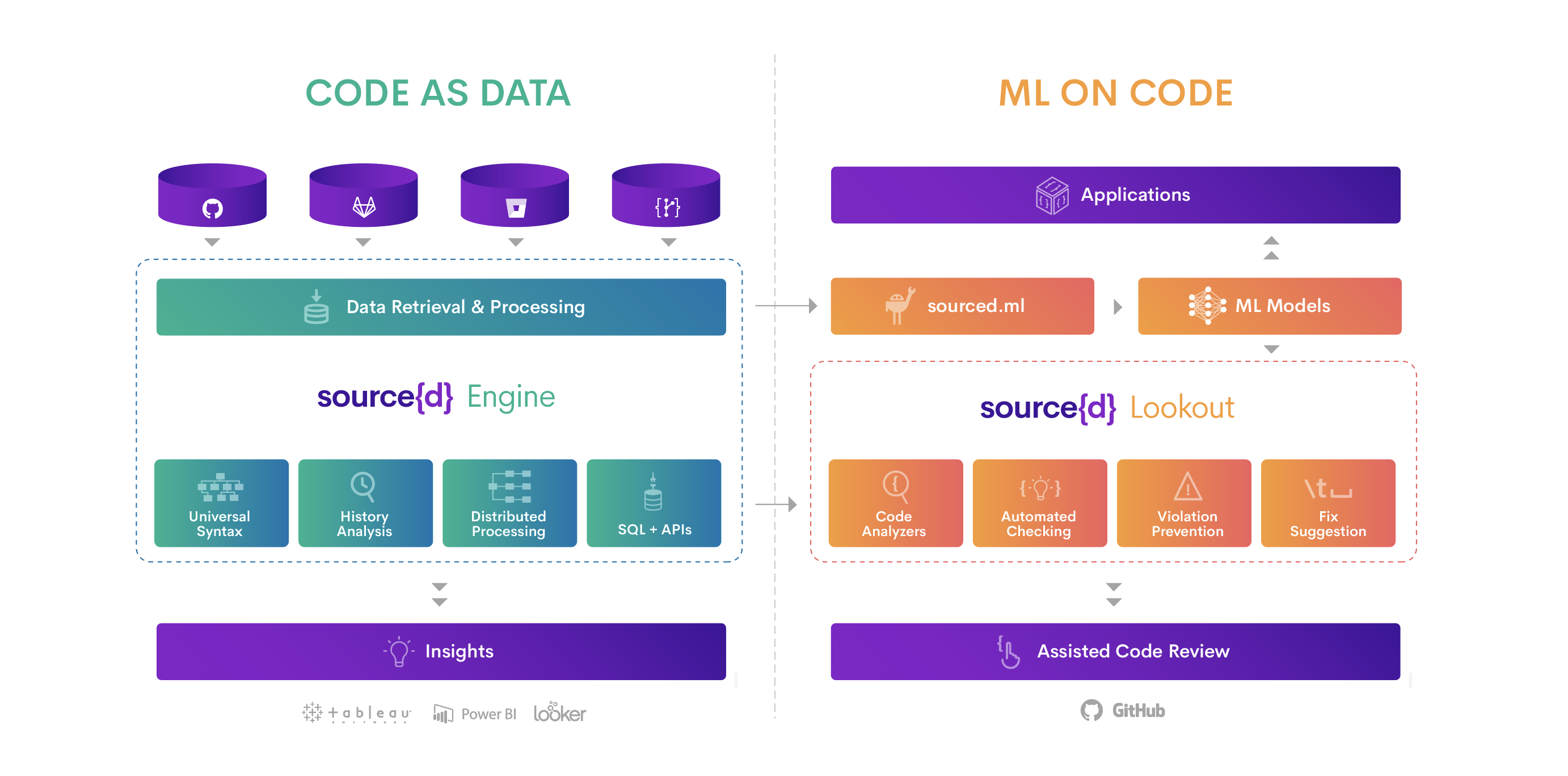

Stack Overview

Code as Data

Overview

3 sub-problems

- Data Retrieval

- Data Processing

- Language Analysis

Data Retrieval

2 projects

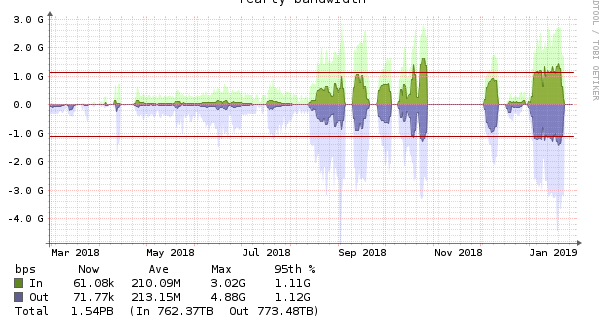

Some gory details

- 51M repositories downloaded

- 26M siva files

- 47M left to go

- ~500TB total size

- cluster with 2k threads, 11TB RAM and 1PB storage

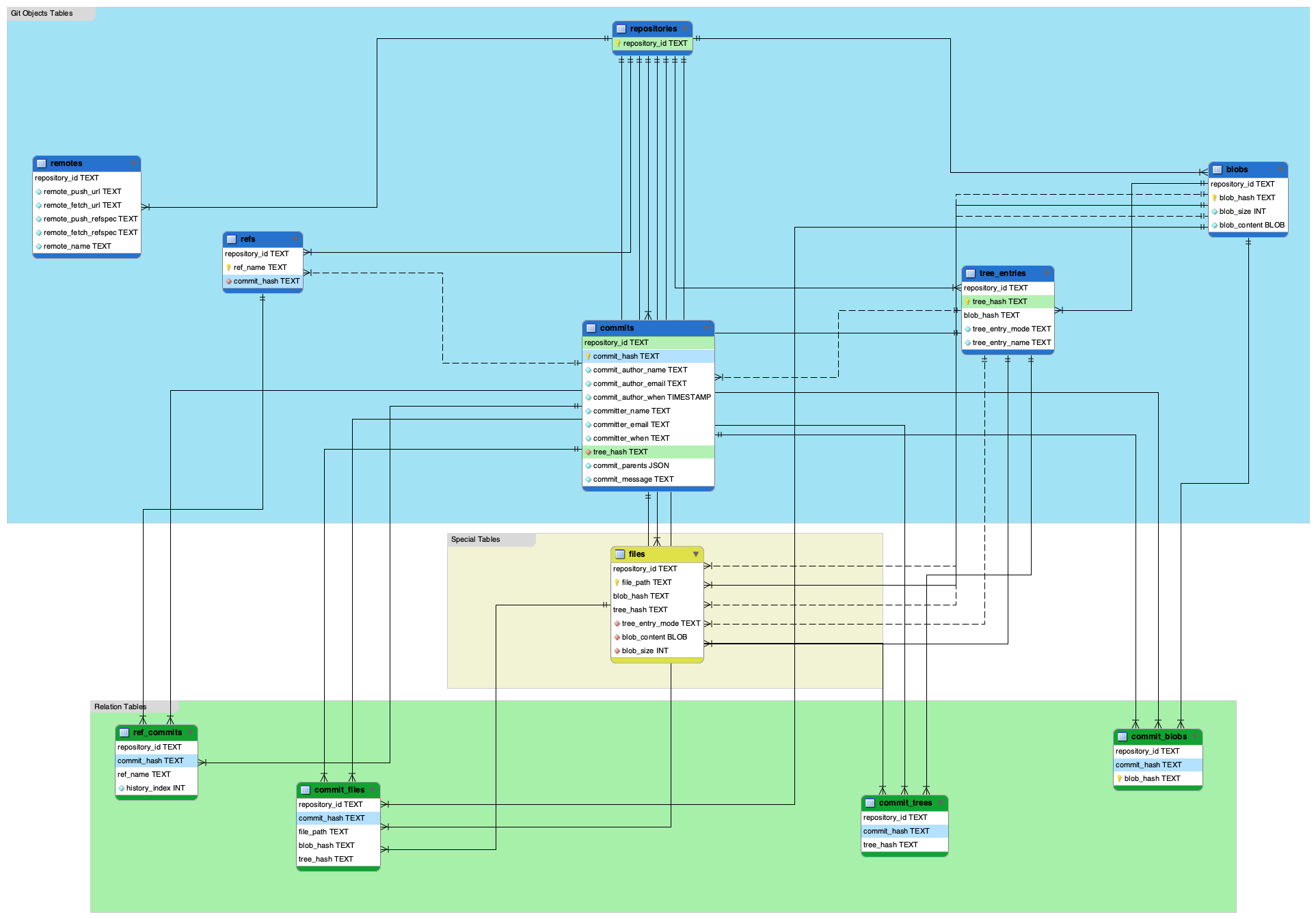

Data Processing

- gitbase - Expose git repos as SQL databases
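
To illustrate why a SQL view of git history is convenient, here is a minimal sketch that uses Python's built-in `sqlite3` as a stand-in. It does not talk to gitbase, and the `commits` table and its columns are hypothetical names chosen for the example, not gitbase's actual schema.

```python
import sqlite3

# Toy stand-in for the idea: commit metadata exposed as a SQL table.
# Table and column names are hypothetical, not gitbase's real schema.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE commits (repository_id TEXT, author TEXT, message TEXT)")
conn.executemany(
    "INSERT INTO commits VALUES (?, ?, ?)",
    [
        ("repo-a", "alice", "Fix typo"),
        ("repo-a", "bob", "Add parser"),
        ("repo-b", "alice", "Initial commit"),
    ],
)

# Once history is tabular, ordinary SQL answers questions about it.
commits_per_repo = conn.execute(
    "SELECT repository_id, COUNT(*) FROM commits "
    "GROUP BY repository_id ORDER BY repository_id"
).fetchall()
print(commits_per_repo)
```

The same kind of aggregate query is what a SQL interface over real repositories makes possible without writing custom git traversal code.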

Language Analysis

- bblfsh - Turn language specific ASTs into UASTs (Universal ASTs)

Universal ASTs Specification

Types shared across languages

- Identifier

- String

- QualifiedIdentifier

- Comment

- Block

- …
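
As a rough illustration of shared types, the sketch below maps two node kinds of Python's own `ast` module onto the `Identifier` and `String` names from the list above. It is a toy under stated assumptions: it does not use bblfsh, the function name `universal_nodes` is made up for the example, and it requires Python 3.8+ (where string literals parse to `ast.Constant`).

```python
import ast

def universal_nodes(source):
    """Map a few Python AST nodes to language-independent type names."""
    found = []
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, ast.Name):
            # Variable and function names become Identifier nodes.
            found.append(("Identifier", node.id))
        elif isinstance(node, ast.Constant) and isinstance(node.value, str):
            # String literals become String nodes.
            found.append(("String", node.value))
    return sorted(found)

nodes = universal_nodes('greeting = "hello"\nprint(greeting)')
for kind, value in nodes:
    print(kind, value)
```

A second front end for another language could emit the same `(type, value)` pairs, which is the property that lets downstream analysis ignore the source language.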

Supported Languages

- Bash

- C++

- C#

- Go

- Java

- JavaScript

- PHP

- Python

- Ruby

- TypeScript

Demo time 😎

Machine Learning on Code

Built on Code as Data

- apollo - Duplicate detection

- tmsc & snippet-ranger - Repository topic modeling

- id2vec - Identifier embedding

- ml - Vendor/garbage detection

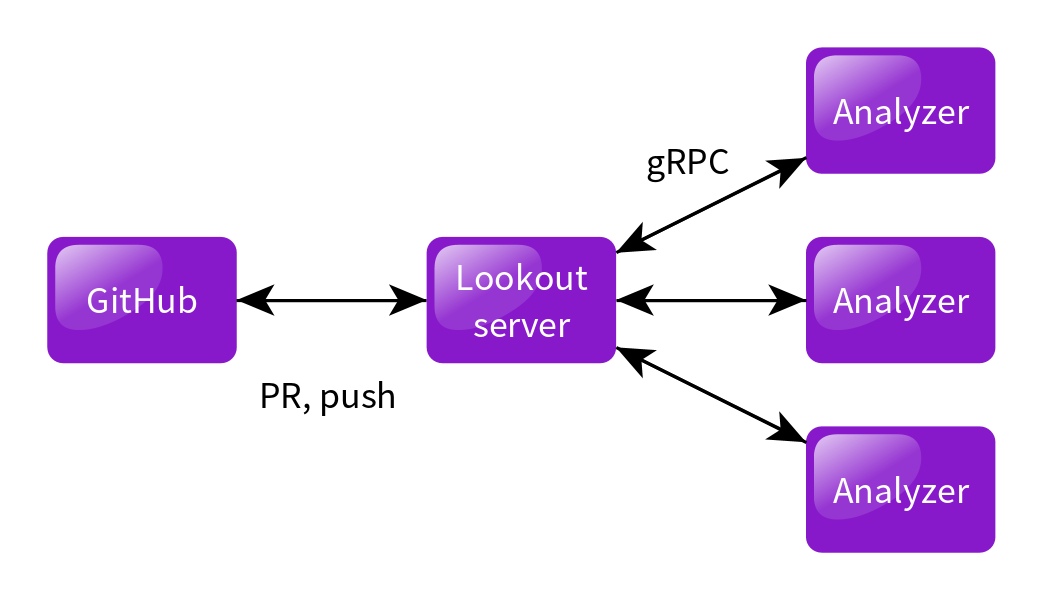

Assisted Code Review

Lookout

Latest ML Team Project

Assisted code review

3 initial targets

- Formatting

- Typos

- Best practices

format-analyzer

Goal: automate formatting

- Model the existing style of repositories

- Apply modeled styles to new code

Design choices

Must explain false positives

OR

Must not have false positives

First experiment

Unsupervised learning with explainable rules

- Learn a tree model on existing code

- Transform the tree to rules

- Apply modeled styles to new code

Problem statement & Features

Model

- Decision tree or random forest

- Bayesian hyper-parameter optimization

- Rule = conjunction of conditions on a branch

- Condition filtering

- Prediction filtering (constant UAST invariant)
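
The "rule = conjunction of conditions on a branch" idea can be sketched as follows. This is a minimal illustration, not the format-analyzer implementation: the tree is hand-built rather than learned, and the feature names (`indent_level`, `prev_is_comma`) and labels are hypothetical.

```python
# Hypothetical hand-built tree: internal nodes test `feature <= threshold`,
# leaves carry a predicted formatting class.
tree = {
    "feature": "indent_level", "threshold": 0,
    "left": {"label": "newline"},
    "right": {
        "feature": "prev_is_comma", "threshold": 0,
        "left": {"label": "newline+indent"},
        "right": {"label": "space"},
    },
}

def extract_rules(node, conditions=()):
    """Return one (conditions, label) rule per root-to-leaf branch."""
    if "label" in node:
        return [(list(conditions), node["label"])]
    name, thr = node["feature"], node["threshold"]
    # The left child keeps samples with feature <= threshold, the right the rest.
    left = extract_rules(node["left"], conditions + ((name, "<=", thr),))
    right = extract_rules(node["right"], conditions + ((name, ">", thr),))
    return left + right

rules = extract_rules(tree)
for conds, label in rules:
    text = " AND ".join(f"{n} {op} {t}" for n, op, t in conds)
    print(f"IF {text} THEN {label}")
```

Because each rule is a readable conjunction, a reported formatting issue can be traced back to the exact conditions that triggered it, which is what makes false positives explainable.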

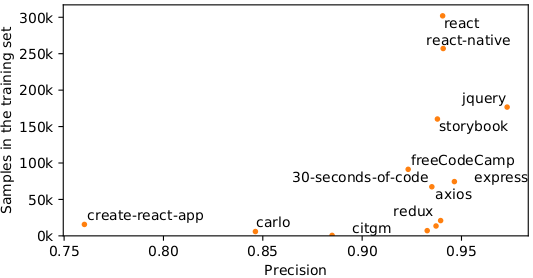

Evaluation

Reproduction task: ~94.3% precision

Ways to improve

- More expressive model

- Autoregressive model

- Use more data than a single repository to train

Second experiment

Ongoing, early stage. Goal: use expressive models

- Generative model AST → Code

- Meta-learning on numerous repositories

Problem statement

Predict formatting characters before each leaf of the AST

- Inter-leaves formatting

- Sequence prediction
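
One way to picture the prediction targets is to extract, from already formatted code, the run of characters that precedes each leaf. The sketch below assumes the leaves are given as a list of token strings in source order and that the gaps contain only whitespace; the helper name `formatting_before_leaves` is made up for the example.

```python
def formatting_before_leaves(source, leaves):
    """Return the run of characters that precedes each leaf token in `source`."""
    gaps, position = [], 0
    for leaf in leaves:
        # Next occurrence of the leaf text at or after the current position.
        start = source.index(leaf, position)
        gaps.append(source[position:start])
        position = start + len(leaf)
    return gaps

source = "if (x) {\n    y = 1;\n}"
leaves = ["if", "(", "x", ")", "{", "y", "=", "1", ";", "}"]
targets = formatting_before_leaves(source, leaves)
for leaf, gap in zip(leaves, targets):
    print(repr(gap), "->", leaf)
```

Each gap is itself a short character sequence, which is why the task is framed as sequence prediction per leaf.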

Model

- GNN encoder

- RNN decoder for each leaf

- Property: short dependencies

- Property: more autoregressive

Training

Supervised learning?

- On 1 repo: the dataset can be small

- On many repos: the style is not consistent

- But model is expressive: more data is good

Meta-learning

2 steps

- Learn how to learn style on many repositories

- Learn style on a specific repository

- Property: can use data from many repositories

- Property: can model small repos

Multi-task Learning

Each repository is considered as a task $\mathcal{T}_i$

$$\min_\theta\sum_{\mathcal{T}_i \sim p(\mathcal{T})} \mathcal{L}_{\mathcal{T}_i}(f_{\theta})$$

Meta-learning

Model-Agnostic Meta-Learning approach

Minimize loss AFTER having optimized for a task $\mathcal{T}_i$

$$\min_\theta\sum_{\mathcal{T}_i \sim p(\mathcal{T})} \mathcal{L}_{\mathcal{T}_i}(f_{\theta'_i})$$

$\theta'_i$ is optimized from $\theta$ on $\mathcal{T}_i$ at each iteration
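
The objective above can be made concrete on a toy problem. The sketch below assumes scalar parameters, quadratic task losses $\mathcal{L}_{\mathcal{T}_i}(\theta) = (\theta - c_i)^2$, and a single inner gradient step to obtain $\theta'_i$; the task optima and step sizes are hypothetical values, and none of this reflects the actual formatting model.

```python
# Toy tasks: task i has loss L_i(theta) = (theta - c_i)^2 with its own optimum c_i.
task_optima = [-1.0, 0.5, 2.0]
inner_lr, outer_lr = 0.1, 0.05  # hypothetical step sizes

def adapt(theta, c):
    """Inner loop: one gradient step on the task loss, giving theta'_i."""
    return theta - inner_lr * 2.0 * (theta - c)

def meta_gradient(theta, c):
    """Gradient w.r.t. theta of the post-adaptation loss (theta'_i - c)^2."""
    d_adapted = 1.0 - 2.0 * inner_lr  # d theta'_i / d theta
    return 2.0 * (adapt(theta, c) - c) * d_adapted

theta = 5.0
for _ in range(500):
    # Outer loop: descend the sum of losses measured AFTER adaptation.
    theta -= outer_lr * sum(meta_gradient(theta, c) for c in task_optima)

print(theta)
```

The key difference from plain multi-task training is that the outer gradient flows through the inner update (the `d_adapted` factor), so $\theta$ is chosen to be a good starting point for adaptation rather than a good final answer.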

Results

For the next seminar!

Conclusion

- Lots of Open Source Software: sourced.tech

- Git made easy with SQL interface

- Universal ASTs

- Fun with tree & meta-learning

Thank you for your attention!

Questions & Discussion

Hugo Mougard <hugo@sourced.tech>